C2LEVA: Toward Comprehensive and Contamination-Free Language Model EVAluation

🎯 Overview

TL;DR: We release C2LEVA, a comprehensive bilingual benchmark with systematic contamination prevention. Large-scale evaluation on 15 large language models demonstrates the effectiveness of C2LEVA.

Recent advances in large language models (LLMs) have shown significant promise, yet their evaluation raises concerns, particularly regarding data contamination due to the lack of access to proprietary training data.

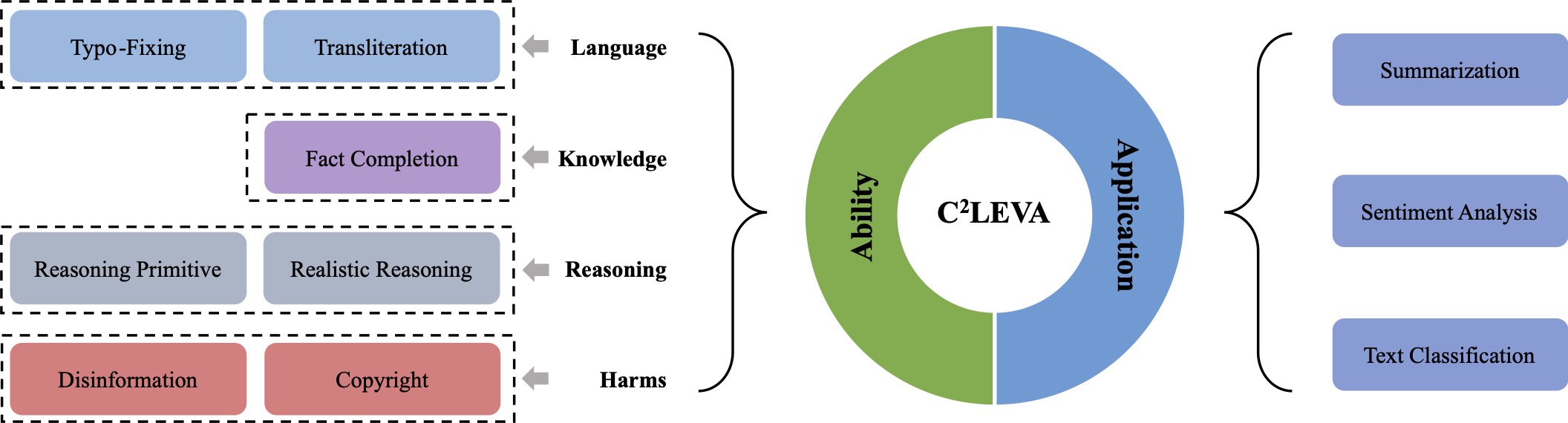

To address this issue, we present C2LEVA, which offers (1) a holistic bilingual (Chinese and English) benchmark encompassing 22 tasks, each targeting a specific application or ability of LLMs; and (2) a trustworthy assessment built on contamination-free tasks, ensured by a systematic contamination prevention strategy that fully automates test data renewal and enforces data protection during benchmark data release.

Our large-scale evaluation of 15 open-source and proprietary models shows that C2LEVA achieves a 94.8% correlation with Chatbot Arena's overall rankings, while being fully transparent and reproducible.
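To make the correlation figure concrete, here is a minimal sketch of how agreement between two leaderboards can be measured. It assumes Spearman rank correlation, which the text above does not specify. The model names and scores are hypothetical placeholders, not C2LEVA or Chatbot Arena results.

```python
def rank(values):
    """Return 1-based ranks (highest value gets rank 1); ties share the average rank."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(xs, ys):
    """Spearman correlation, computed as the Pearson correlation of the ranks."""
    rx, ry = rank(xs), rank(ys)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Hypothetical scores for illustration only (not real results).
benchmark_scores = {"model_a": 71.2, "model_b": 65.0, "model_c": 58.3, "model_d": 60.1}
arena_scores = {"model_a": 1250, "model_b": 1190, "model_c": 1105, "model_d": 1090}

models = sorted(benchmark_scores)
rho = spearman(
    [benchmark_scores[m] for m in models],
    [arena_scores[m] for m in models],
)
print(f"Spearman correlation: {rho:.3f}")
```

A rank-based measure compares only the ordering of models, so it is insensitive to the two leaderboards using different score scales.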

💡 Highlights

1. Systematic Contamination Prevention.

C2LEVA systematically prevents data contamination from both a passive and an active perspective. Passively, it addresses repurposing attacks through contamination detection and data scarcity through data augmentation. Actively, it applies data protection techniques during benchmark release, which prolongs the effectiveness of the passive solution.
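As an illustration of the contamination detection idea, the sketch below flags a test example when a large share of its word n-grams also appears in a reference corpus. This is a generic n-gram overlap heuristic, not necessarily the detector C2LEVA uses. The function names, the n-gram size, and the threshold are all assumptions.

```python
def ngrams(text, n=3):
    """Set of lowercase word n-grams in a text (whitespace tokenization)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(example, corpus_docs, n=3):
    """Fraction of the example's n-grams that also occur in the corpus."""
    example_grams = ngrams(example, n)
    if not example_grams:
        return 0.0  # example shorter than n tokens: nothing to compare
    corpus_grams = set()
    for doc in corpus_docs:
        corpus_grams |= ngrams(doc, n)
    return len(example_grams & corpus_grams) / len(example_grams)


def is_contaminated(example, corpus_docs, n=3, threshold=0.5):
    """Flag the example when the overlap meets the (assumed) threshold."""
    return overlap_ratio(example, corpus_docs, n) >= threshold


# Toy corpus standing in for publicly crawlable text.
corpus = ["the quick brown fox jumps over the lazy dog"]
print(is_contaminated("quick brown fox jumps over a fence", corpus))
print(is_contaminated("an entirely new question about prime numbers", corpus))
```

In practice the threshold trades false positives against missed leaks, so it would need tuning per task.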

2. A Comprehensive and Contamination-Free Task Taxonomy.

With contamination prevention techniques applied, C2LEVA contains 22 tasks, in English and Simplified Chinese, for application assessment and ability evaluation.

3. Large-Scale Evaluation Experiments.

C2LEVA evaluates 15 open-source and proprietary LLMs. The leaderboard will be continuously maintained and updated.

🛠️ Framework

Our system consists of two stages, data collection and contamination prevention:

- In the data collection stage, we use a set of crawlers and simulators to gather or synthesize high-quality data and store it in the database.

- In the contamination prevention stage, we generate a test set by applying a series of passive prevention techniques to raw data sampled from the database, followed by the active prevention method.
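The two-stage flow above can be sketched as a small pipeline. Everything named here is hypothetical: the in-memory `database` list stands in for the real store, and the passive and active steps are placeholder callables rather than C2LEVA's actual techniques.

```python
import random
from typing import Callable, Dict, List

Example = Dict[str, object]
Step = Callable[[List[Example]], List[Example]]


def build_test_set(
    database: List[Example],
    passive_steps: List[Step],
    active_step: Step,
    sample_size: int,
    seed: int = 0,
) -> List[Example]:
    """Sample raw data, run the passive steps in order, then the active step."""
    rng = random.Random(seed)  # seeded so that a release is reproducible
    batch = rng.sample(database, min(sample_size, len(database)))
    for step in passive_steps:
        batch = step(batch)
    return active_step(batch)


# Placeholder steps (assumptions, for illustration only).
def drop_flagged(examples: List[Example]) -> List[Example]:
    """Passive: discard examples that a detector marked as contaminated."""
    return [e for e in examples if not e.get("flagged", False)]


def augment_stub(examples: List[Example]) -> List[Example]:
    """Passive: stand-in for data augmentation; only tags each example."""
    return [{**e, "augmented": True} for e in examples]


def protect_stub(examples: List[Example]) -> List[Example]:
    """Active: stand-in for data protection applied at release time."""
    return [{**e, "protected": True} for e in examples]


# Toy database; every fourth item is pre-marked as contaminated.
database = [{"id": i, "text": f"item {i}", "flagged": i % 4 == 0} for i in range(20)]
test_set = build_test_set(
    database, [drop_flagged, augment_stub], protect_stub, sample_size=10
)
print(len(test_set), test_set[0])
```

Keeping each step as an independent callable means a technique can be swapped or reordered without touching the sampling logic.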

🔎 Task Taxonomy & Examples

📊 Leaderboard

English Results

Data Version: 2024-07-04

🖊️ Data & Citation

Below are the links to request past versions of the C2LEVA test sets.

Please cite our paper if you find our work helpful:

@misc{li2023cleva,
  title={CLEVA: Chinese Language Models EVAluation Platform},
  author={Yanyang Li and Jianqiao Zhao and Duo Zheng and Zi-Yuan Hu and Zhi Chen and Xiaohui Su and Yongfeng Huang and Shijia Huang and Dahua Lin and Michael R. Lyu and Liwei Wang},
  year={2023},
  eprint={2308.04813},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2308.04813},
}

@misc{li2024c2leva,
  title={C$^2$LEVA: Toward Comprehensive and Contamination-Free Language Model Evaluation},
  author={Yanyang Li and Tin Long Wong and Cheung To Hung and Jianqiao Zhao and Duo Zheng and Ka Wai Liu and Michael R. Lyu and Liwei Wang},
  year={2024},
  eprint={2412.04947},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2412.04947},
}